Few prior works study deep learning on point sets. PointNet by Qi et al. is a pioneer in this direction. However, by design PointNet does not capture the local structures induced by the metric space the points live in, which limits its ability to recognize fine-grained patterns and to generalize to complex scenes. In this work, the authors introduce a hierarchical neural network that applies PointNet recursively on a nested partitioning of the input point set. By exploiting metric space distances, the network is able to learn local features with increasing contextual scales. Observing further that point sets are usually sampled with varying densities, which greatly degrades the performance of networks trained on uniform densities, they propose novel set learning layers that adaptively combine features from multiple scales. Experiments show that the network, called PointNet++, is able to learn deep point set features efficiently and robustly. In particular, it obtains results significantly better than the state of the art on challenging benchmarks of 3D point clouds.

1. Architecture

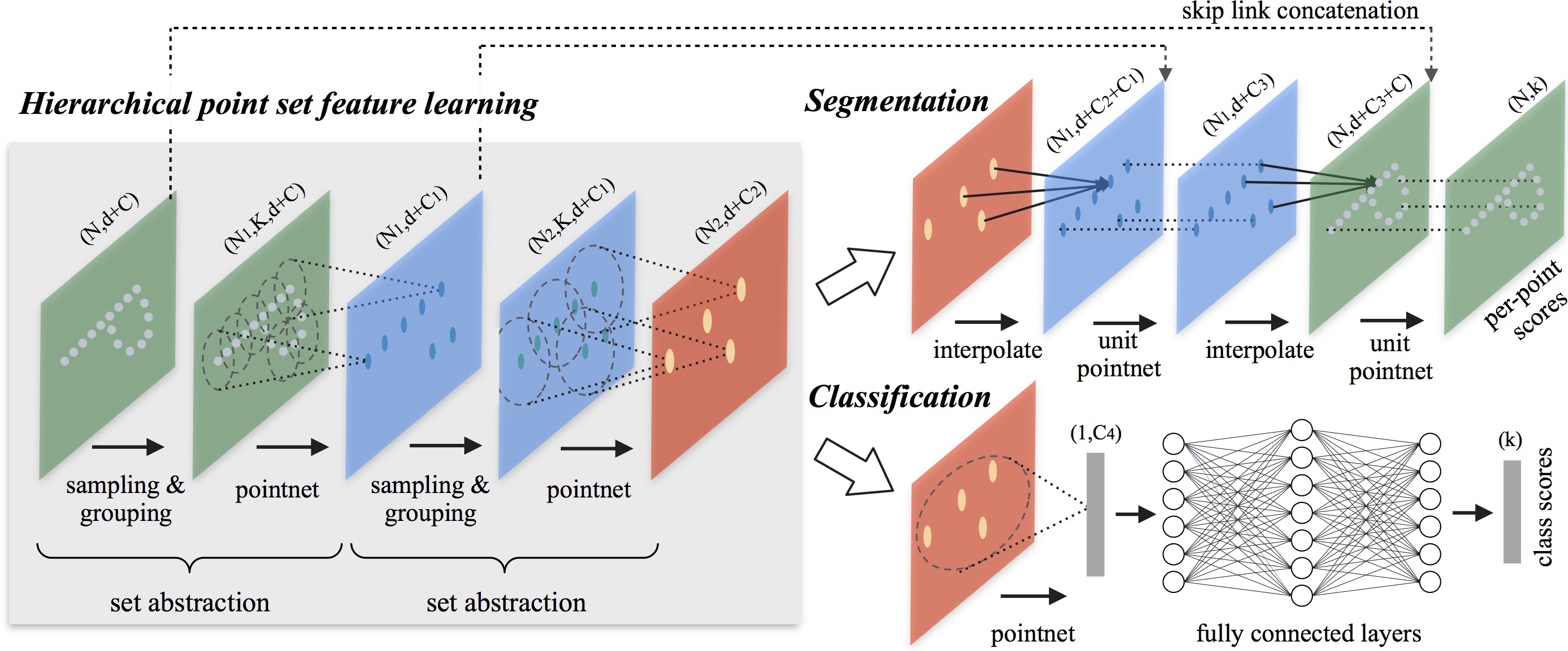

This paper introduces a novel type of neural network, named PointNet++, that processes a set of points sampled in a metric space in a hierarchical fashion (2D points in Euclidean space are used for this illustration). The general idea of PointNet++ is simple. The authors first partition the set of points into overlapping local regions using the distance metric of the underlying space. As in CNNs, they extract local features that capture fine geometric structures from small neighborhoods; these local features are then grouped into larger units and processed to produce higher-level features. This process is repeated until features of the whole point set are obtained.

Each level of this structure consists of three parts:

- Sampling layer: selects a subset of points from the input point cloud; these points serve as the centroids of the local regions;

- Grouping layer: selects the points that are "adjacent" to each centroid according to a neighborhood rule;

- PointNet layer: a mini-PointNet encodes the pattern of each local region into a feature vector.

Input: a matrix of dimension N × (d + C), i.e. N points, each with d-dimensional coordinates and a C-dimensional feature vector.

Output: a matrix of dimension N′ × (d + C′), i.e. N′ subsampled points, each with d-dimensional coordinates and a new C′-dimensional feature vector that summarizes its local context.
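The three layers above can be sketched in NumPy as follows. This is only a minimal illustration, not the authors' implementation: the function names, the choice of point 0 as the first sample, the padding rule in `ball_query`, and the single ReLU layer standing in for the mini-PointNet are all assumptions made for the example.

```python
import numpy as np

def farthest_point_sampling(xyz, n_samples):
    """Sampling layer: pick n_samples indices by iterative farthest point sampling."""
    chosen = np.zeros(n_samples, dtype=int)      # first sample is point 0 (arbitrary)
    min_dist = np.full(xyz.shape[0], np.inf)     # squared distance to nearest chosen point
    for i in range(1, n_samples):
        d = np.sum((xyz - xyz[chosen[i - 1]]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, d)
        chosen[i] = int(np.argmax(min_dist))
    return chosen

def ball_query(xyz, centroids, radius, k):
    """Grouping layer: k indices per centroid, taken from the points within radius."""
    d = np.linalg.norm(centroids[:, None, :] - xyz[None, :, :], axis=2)  # (N', N)
    groups = np.zeros((centroids.shape[0], k), dtype=int)
    for i, row in enumerate(d):
        idx = np.flatnonzero(row <= radius)[:k]
        # Each centroid is itself an input point, so idx is never empty.
        # Pad short groups by repeating the first hit (an assumed padding rule).
        groups[i] = np.concatenate([idx, np.full(k - idx.size, idx[0])])
    return groups

def set_abstraction(xyz, feats, n_samples, radius, k, weight):
    """Map N x (d + C) input to N' x d centroids and N' x C' features."""
    centroids = xyz[farthest_point_sampling(xyz, n_samples)]
    groups = ball_query(xyz, centroids, radius, k)               # (N', k)
    local_xyz = xyz[groups] - centroids[:, None, :]              # centroid-relative coords
    local = np.concatenate([local_xyz, feats[groups]], axis=2)   # (N', k, d + C)
    hidden = np.maximum(local @ weight, 0.0)                     # shared one-layer MLP + ReLU
    return centroids, hidden.max(axis=1)                         # max-pool over each group

rng = np.random.default_rng(0)
xyz = rng.random((128, 3))                   # N = 128, d = 3
feats = rng.random((128, 4))                 # C = 4
weight = rng.standard_normal((3 + 4, 16))    # C' = 16
new_xyz, new_feats = set_abstraction(xyz, feats, n_samples=32, radius=0.3, k=8, weight=weight)
print(new_xyz.shape, new_feats.shape)        # (32, 3) (32, 16)
```

Max pooling over each group keeps the output independent of the order of the points inside the group, which is the property PointNet relies on.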

2. Non-uniform sampling density

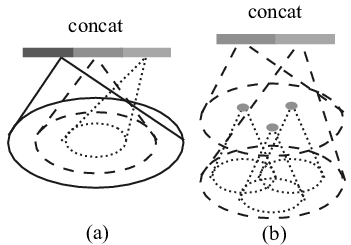

2.1. Multi-scale grouping (MSG)

As shown on the left of the figure above, at each grouping layer every group is formed at multiple scales (multiple radius values are set). A PointNet extracts features at each scale, and the per-scale features are concatenated to form the new feature.
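A rough NumPy sketch of the multi-scale idea for a single centroid is given below. The helper names are hypothetical, and a single ReLU layer followed by max pooling stands in for the per-scale PointNet; the sketch also assumes the centroid is one of the input points, so every ball contains at least one point.

```python
import numpy as np

def pooled_feature(points, centroid, radius, weight):
    """Max-pool a shared one-layer MLP over the points within radius of centroid."""
    mask = np.linalg.norm(points - centroid, axis=1) <= radius
    local = points[mask] - centroid              # centroid-relative coordinates
    return np.maximum(local @ weight, 0.0).max(axis=0)

def multi_scale_feature(points, centroid, radii, weights):
    """Concatenate one pooled feature per radius (one weight matrix per scale)."""
    return np.concatenate(
        [pooled_feature(points, centroid, r, w) for r, w in zip(radii, weights)]
    )

rng = np.random.default_rng(0)
points = rng.random((256, 3))
radii = [0.1, 0.2, 0.4]
weights = [rng.standard_normal((3, c)) for c in (8, 16, 32)]
feature = multi_scale_feature(points, points[0], radii, weights)
print(feature.shape)   # (56,) = 8 + 16 + 32
```

Each radius gets its own weights because neighborhoods of different sizes are processed by separate feature extractors before concatenation.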

2.2. Multi-resolution grouping (MRG)

As shown on the right of the figure above, the feature of a region is the concatenation of two vectors. The left vector is obtained after two set abstractions, each with a different radius. The right vector is obtained by applying a single PointNet directly to all points of the region in the current layer. When the density of the point cloud is uneven, the two vectors can be given different weights according to the density of the current patch. For example, when the density in the patch is low, the left vector is less reliable than the features extracted from all the points of the patch, so the weight of the right vector is increased. Compared with MSG, this reduces the amount of computation while still addressing the density problem.
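To make the weighting idea concrete, here is a toy NumPy sketch. The linear density rule and the reference count `n_reference` are purely illustrative assumptions and are not taken from the paper or its code; the sketch only shows how a sparse patch can shift weight from the left vector to the right one.

```python
import numpy as np

def density_weight(n_points_in_patch, n_reference):
    """Hypothetical confidence in the left (subregion-based) vector, in [0, 1]."""
    return min(1.0, n_points_in_patch / n_reference)

def multi_resolution_feature(left_vec, right_vec, n_points_in_patch, n_reference=32):
    """Concatenate the two vectors after density-dependent scaling."""
    w = density_weight(n_points_in_patch, n_reference)
    return np.concatenate([w * left_vec, (1.0 - w) * right_vec])

# A sparse patch with 8 of 32 reference points: the left vector gets weight 0.25,
# the right vector gets weight 0.75.
print(multi_resolution_feature(np.ones(4), np.ones(4), n_points_in_patch=8))
```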

3. Classification and segmentation

The network is divided into Set Abstraction (SA) layers, Feature Propagation (FP) layers, and fully connected (FC) layers. The segmentation network has a UNet-like structure; the code of the entire segmentation network is as follows:

```python
# Set Abstraction layers
```

SA module (feature extraction module): used for downsampling. The input is (N, D), i.e. N points with D-dimensional features, and the output is (N′, D′), i.e. N′ points after downsampling. Farthest point sampling selects the N′ center points, and the D′-dimensional feature of each center point is computed by PointNet. This was already covered for the classification network, so I won't go into details here.

FP module (feature transfer module): used for upsampling. Features are interpolated using the inverse of the distance as the weight. The interpolation maps an (N, D) input to an (N′, D) output: the number of points changes, but the feature dimension D remains unchanged.
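The inverse-distance interpolation can be sketched in NumPy as below. The function name is hypothetical, and the neighbor count `k=3`, the distance power `p=2`, and the small `eps` guard against division by zero are assumptions chosen for the example rather than values stated in this post.

```python
import numpy as np

def interpolate_features(dense_xyz, sparse_xyz, sparse_feats, k=3, p=2, eps=1e-8):
    """Propagate features from a sparse point set to a dense one.

    Each dense point receives the inverse-distance-weighted average of the
    features of its k nearest sparse points.
    """
    d = np.linalg.norm(dense_xyz[:, None, :] - sparse_xyz[None, :, :], axis=2)
    nn = np.argsort(d, axis=1)[:, :k]               # indices of the k nearest sparse points
    nn_d = np.take_along_axis(d, nn, axis=1)        # their distances
    w = 1.0 / (nn_d ** p + eps)                     # inverse-distance weights
    w /= w.sum(axis=1, keepdims=True)               # normalize per dense point
    return np.sum(w[:, :, None] * sparse_feats[nn], axis=1)

rng = np.random.default_rng(0)
sparse_xyz = rng.random((32, 3))
sparse_feats = rng.random((32, 16))
dense_xyz = rng.random((128, 3))
dense_feats = interpolate_features(dense_xyz, sparse_xyz, sparse_feats)
print(dense_feats.shape)   # (128, 16): more points, same feature dimension
```

Because the weights are normalized to sum to one, the output is a convex combination of neighboring features, so the feature dimension is preserved and values stay within the range of the inputs.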

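- Author: Chris Yan

- Link: https:/Yansz.github.io/2019/12/23/PointNet++/

- Copyright notice: Unless otherwise stated, all articles on this blog are released under the MIT license. Please credit the source when reposting!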